The Rise of AI in Legal Transactions – A Double-Edged Sword

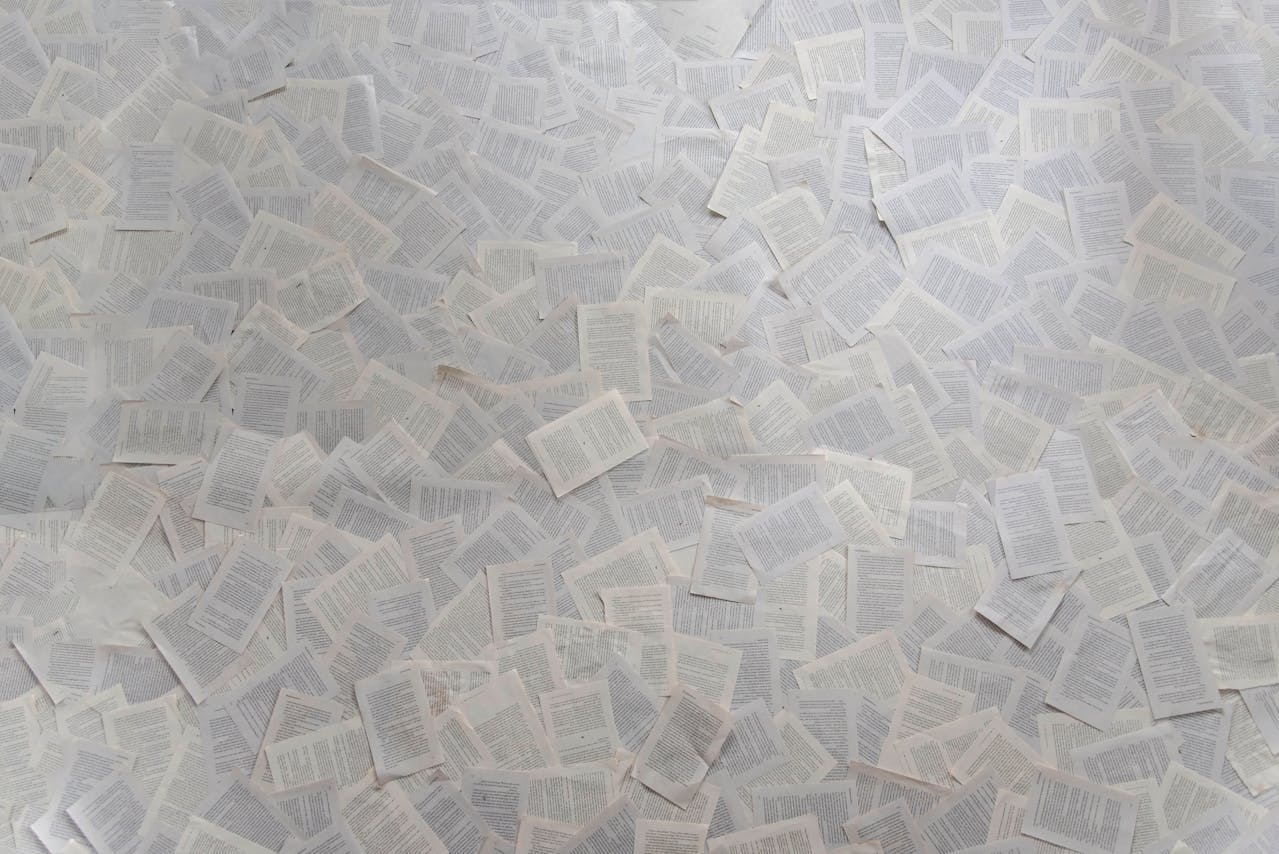

Artificial intelligence is transforming legal practice, offering unprecedented efficiencies in document review, contract drafting, and due diligence. However, AI is increasingly being misused in high-value commercial transactions to generate excessive, often unnecessary, legal documentation. Instead of streamlining deals, some large firms are deploying AI to produce vast amounts of contract markups, dense due diligence reports, and endless revisions, often serving no real purpose beyond justifying high hourly fees.

A Conversation on LinkedIn with a BigLaw Consultant

This blog post actually started last year in a LinkedIn conversation with a BigLaw Consultant, where I posed the following proposition:

“Would this work as a possibility : Introducing a requirement for firms to declare when and where AI has been used to produce text or analysis, enabling the other side to assess the nature of the output and deploy appropriate resources? Similar to Brian Inkster’s The Time Blawg where he notes ChatGPT hasn’t been used? Take it we’re not there yet amongst law firms for AI-use transparency?”

The BigLaw Consultant replied:

“Thanks Gav. I see that discussion, both here and with clients, quite a lot – and I’m not sure where I stand on it tbh. For one of the examples I had in mind in yesterday’s comment, I don’t think the issue was had AI been used or had it been disclosed…

More that firm A just dumped the output on firm B to wade through. I thought that approach questionable whether it was AI that produced it, or an army of paralegals.”

What follows is a discussion of the effects and dangers of that approach, and of its impact on the different people involved.

The Billable Hour Problem – AI as a Volume Generator

The traditional billable hour model rewards volume, not efficiency. AI’s ability to produce extensive legal content in seconds has exacerbated this issue, leading to inflated workloads that must still be reviewed by human lawyers—at premium rates. This misalignment creates a paradox where technology, meant to improve efficiency, is instead being used to manufacture inefficiency.

Clients are left to pay for AI-generated documents that add little value, while in-house legal teams and smaller counterparties are buried under a mountain of legalese. The net result? Longer transactions, greater costs, and increased frustration on all sides.

The Hidden Cost: Lawyer Burnout and Training Concerns

AI-driven overproduction is not only an issue for clients—it is unsustainable for lawyers themselves. Junior associates and support staff are being reduced to AI proofreaders rather than gaining meaningful legal experience. Instead of learning negotiation tactics or strategic thinking, they are reviewing and refining AI-generated content, often at the expense of their long-term professional development.

The pressure to manage this flood of information also contributes to burnout, with lawyers spending more time reviewing unnecessary detail than engaging in substantive, value-driven legal work.

An Environmental Concern: The Digital Waste of AI-Generated Documents

Beyond the human and economic toll, AI-generated waffle has an environmental impact. AI-driven legal work demands significant computing power, increasing energy consumption across firms. The legal sector, historically paper-heavy, now risks becoming an energy-heavy industry with little thought given to the sustainability implications of excessive document generation.

Ethical Considerations – AI’s Influence on Legal Standards

Most lawyers adhere to high ethical and professional standards, ensuring they act in their clients’ best interests and uphold the integrity of the legal process. However, as AI continues to reshape legal work, the growing involvement of AI consultants, who may not be bound by the same professional obligations, raises concerns. When AI is used indiscriminately to generate unnecessary work for billing purposes rather than for legal necessity, the profession risks losing sight of its core purpose: delivering clear, effective legal advice.

Inkster’s Law and the AI Accuracy Challenge

This growing issue also aligns with Inkster’s Law, a term coined by yours truly, which suggests that the time required to check for accuracy in AI-generated material follows a 2:1 ratio—meaning that for every hour AI saves in document production, lawyers often need two hours to verify its accuracy. In the context of large commercial transactions, this creates a fundamental contradiction: while AI can generate vast amounts of legal text at speed, the process of reviewing, refining, and ensuring its reliability becomes even more time-intensive.
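To make the arithmetic concrete, here is a minimal Python sketch of that 2:1 ratio. The function name `net_hours` and its parameters are my own illustrative choices, and the ratio is simply the rule of thumb stated above, not a measured figure.

```python
def net_hours(hours_saved_by_ai: float, verification_ratio: float = 2.0) -> float:
    """Net change in lawyer time under the 2:1 rule of thumb.

    A positive result means more time is spent overall than was saved.
    Both the name and the default ratio are illustrative assumptions.
    """
    # Hours needed to check the AI output for accuracy.
    verification_hours = hours_saved_by_ai * verification_ratio
    # Subtract the hours the AI saved in production.
    return verification_hours - hours_saved_by_ai


# If AI saves 10 hours of drafting, checking it takes 20 hours,
# leaving the team 10 hours worse off overall.
print(net_hours(10))  # 10.0
```

On these assumptions, any ratio above 1:1 means the time saved in drafting is more than consumed by checking.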

The sheer volume of AI-generated content does not just overwhelm counterparties—it burdens the very lawyers expected to “streamline” transactions. Instead of reducing inefficiencies, AI’s misuse in big law often compounds them, making commercial deals slower, costlier, and less effective.

Recognising and addressing this flaw is crucial if AI is to be deployed in a way that genuinely enhances legal practice rather than undermining it.

A Smarter Way Forward – Using AI Conscientiously

Law firms must rethink their approach. AI should be deployed to enhance legal practice, not to artificially inflate workloads. Real value in legal services comes from expertise, judgment, and strategic thinking—not from drowning counterparties in excessive AI-generated documentation.

Clients, too, have a crucial role to play. They should demand greater transparency in how AI is used in legal matters and advocate for value-based pricing models that prioritise efficiency over unnecessary complexity.

AI for Law Firms – A Guide to Conscientious Use

AI has the power to transform the legal profession for the better—if used responsibly. To help firms make informed choices, see our guide to some of the best AI tools for law firms, designed to be used conscientiously to enhance efficiency rather than create unnecessary work.

Any further thoughts on the subject? It would be great to hear from you: https://uk.linkedin.com/in/gavward